Apache Spark is a free and open-source analytics engine used for executing data engineering, data science, and machine learning on a single server or cluster. It is a distributed processing system that utilizes in-memory caching to perform processing tasks on very large data sets. Some of the key features of Apache Spark are shown below.

- Fault tolerance.

- Dynamic In Nature.

- Lazy Evaluation.

- Real-Time Stream Processing.

- Speed.

- Reusability.

- Advanced Analytics.

- In Memory Computing.

This post will show you how to install Apache Spark on Fedora.

Step 1 – Install Java JDK

Apache Spark is written in Java, so Java JDK must be installed on your server. You can install it with the following command.

dnf install java-11-openjdk-devel -y

Once Java is installed, you can verify the Java installation using the following command.

java --version

Output:

openjdk 11.0.15 2022-04-19 OpenJDK Runtime Environment 18.9 (build 11.0.15+10) OpenJDK 64-Bit Server VM 18.9 (build 11.0.15+10, mixed mode, sharing)

Step 2 – Install Apache Spark

First, download the latest version of Apache Spark using the wget command.

wget https://dlcdn.apache.org/spark/spark-3.4.1/spark-3.4.1-bin-hadoop3.tgz

After the successful download, extract the downloaded file with the following command.

tar -xvf spark-3.4.1-bin-hadoop3.tgz

Next, move the extracted directory to the /opt directory.

mv spark-3.4.1-bin-hadoop3 /opt/spark

Next, add a user to run Spark then set the ownership of the Spark directory.

useradd spark chown -R spark:spark /opt/spark

Step 3 – Create a Systemd Service File

Next, create a systemd service file to manage the Spark master service.

nano /etc/systemd/system/spark-master.service

Add the following configurations.

[Unit] Description=Apache Spark Master After=network.target [Service] Type=forking User=spark Group=spark ExecStart=/opt/spark/sbin/start-master.sh ExecStop=/opt/spark/sbin/stop-master.sh [Install] WantedBy=multi-user.target

Save and close the file, then create a service file for Spark slave.

nano /etc/systemd/system/spark-slave.service

Add the following configurations.

[Unit] Description=Apache Spark Slave After=network.target [Service] Type=forking User=spark Group=spark ExecStart=/opt/spark/sbin/start-slave.sh spark://your-server-ip:7077 ExecStop=/opt/spark/sbin/stop-slave.sh [Install] WantedBy=multi-user.target

Save and close the file, then reload the systemd daemon.

systemctl daemon-reload

Next, start and enable the Spark master service.

systemctl start spark-master systemctl enable spark-master

You can check the Spark master status using the following command.

systemctl status spark-master

Output:

● spark-master.service - Apache Spark Master

Loaded: loaded (/etc/systemd/system/spark-master.service; disabled; vendor preset: disabled)

Active: active (running) since Sat 2023-06-24 04:26:29 EDT; 4s ago

Process: 16172 ExecStart=/opt/spark/sbin/start-master.sh (code=exited, status=0/SUCCESS)

Main PID: 16184 (java)

Tasks: 34 (limit: 9497)

Memory: 209.8M

CPU: 17.803s

CGroup: /system.slice/spark-master.service

└─16184 /usr/lib/jvm/java-11-openjdk-11.0.15.0.10-1.fc34.x86_64/bin/java -cp /opt/spark/conf/:/opt/spark/jars/* -Xmx1g org.apache.spark.deploy.master.Mast>

Jun 24 04:26:25 fedora systemd[1]: Starting Apache Spark Master...

Jun 24 04:26:25 fedora start-master.sh[16178]: starting org.apache.spark.deploy.master.Master, logging to /opt/spark/logs/spark-spark-org.apache.spark.deploy.master.Ma>

Jun 24 04:26:29 fedora systemd[1]: Started Apache Spark Master.

Step 4 – Access Spark Web Interface

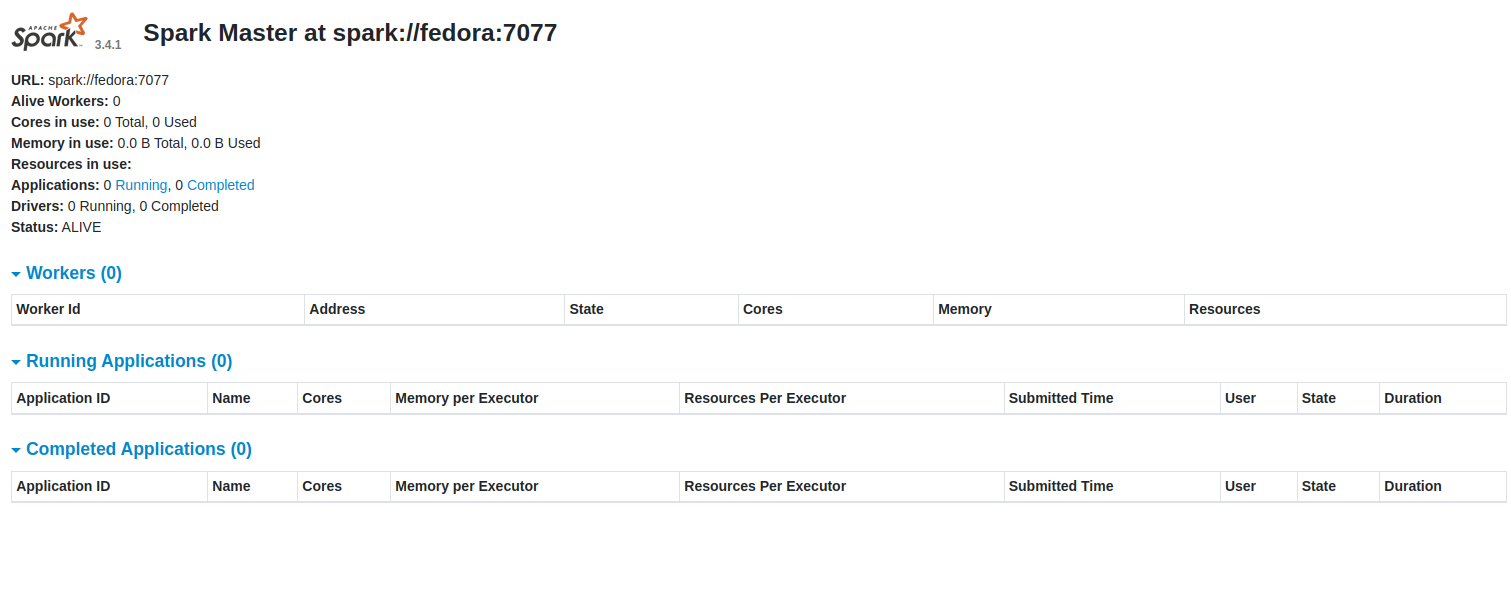

At this point, Spark is installed and running on port 8080. Now, open your web browser and access Apache Spark using the URL http://your-server-ip:8080. You should see the Spark dashboard on the following screen.

Now, start and enable the Spark slave service with the following command.

systemctl start spark-slave systemctl enable spark-slave

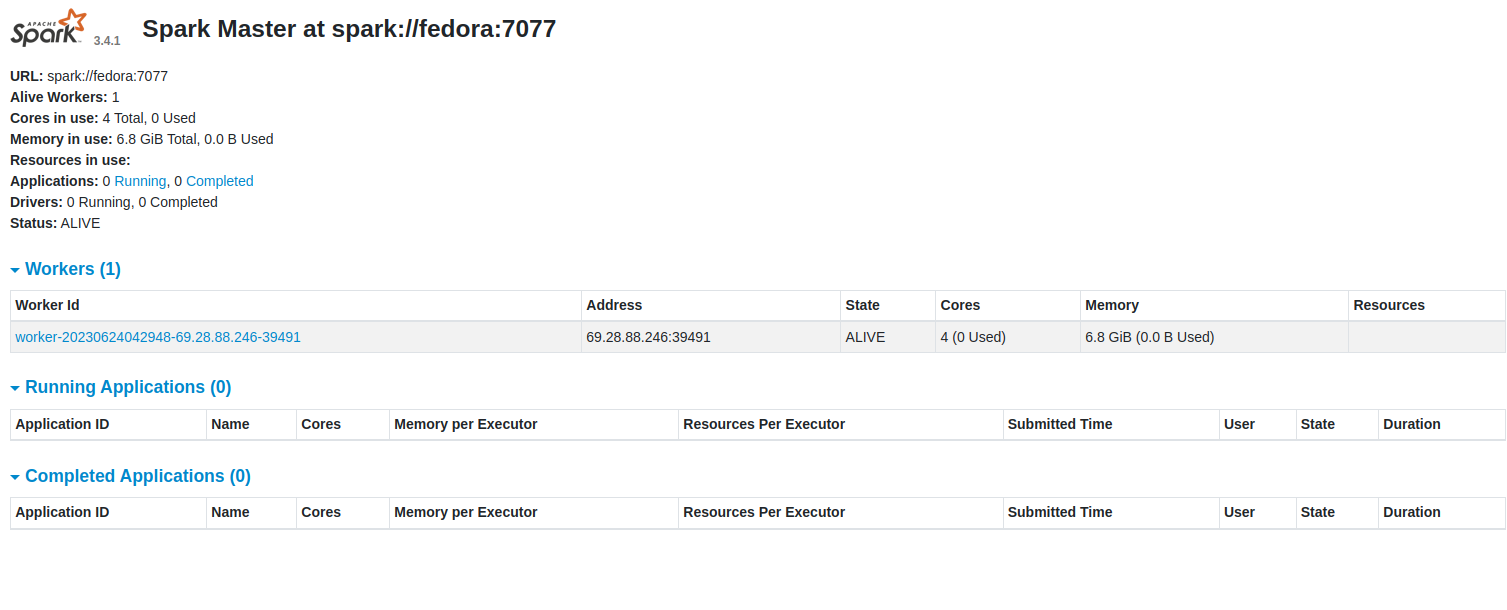

Now, go back to the Spark web interface and reload the page. You should see that a new worker node has been added to the Spark.

Conclusion

In this tutorial, you learned how to install Apache Spark on Fedora. You can now start creating your machine learning and data science project and deploy it using Apache Spark. You can now deploy Apache Spark on dedicated server hosting from Atlantic.Net!