Table of Contents

- Prerequisites

- Step 1: Install Necessary Packages

- Step 2: Set Up Python Environment

- Step 3: Install Python Libraries

- Step 4: Create the Python Script

- Step 5: Initialize the AI Pipeline

- Step 6: Define the Video Prompt and Parameters

- Step 7: Generate and Save Frames

- Step 8: Compile the Video

- Step 9: Execute the Script

- Conclusion

In the ever-evolving digital media landscape, transforming text into captivating video content is a highly sought-after skill. With the advent of powerful GPU servers, such as those offered by Atlantic.Net, this task has become more accessible.

This guide will walk you through setting up and utilizing an Atlantic.Net GPU server to create videos based on text inputs. By leveraging the NVIDIA A100 GPU’s robust capabilities, users can create high-quality videos quickly and efficiently.

Prerequisites

Before proceeding, ensure you have the following:

- An Atlantic.Net Cloud GPU server running Ubuntu 24.04, equipped with an NVIDIA A100 GPU with at least 20 GB of GPU RAM.

- CUDA Toolkit and cuDNN installed.

- Root or sudo privileges.
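One optional way to confirm the GPU prerequisite is to query nvidia-smi, which ships with the NVIDIA driver. The sketch below is illustrative and assumes the driver is already installed. The helper names parse_gpu_memory and query_gpus are hypothetical, and the threshold treats the "20 GB" requirement above as 20 x 1024 MiB.

```python
import shutil
import subprocess

# Assumption: the "20 GB" prerequisite is interpreted as 20 * 1024 MiB.
MIN_GPU_MEMORY_MIB = 20 * 1024


def parse_gpu_memory(csv_text):
    """Parse 'name, memory_mib' lines as printed by nvidia-smi in CSV mode."""
    gpus = []
    for line in csv_text.strip().splitlines():
        if "," not in line:
            continue  # skip anything that is not a 'name, value' row
        name, memory = line.rsplit(",", 1)
        memory = memory.strip()
        if memory.isdigit():
            gpus.append((name.strip(), int(memory)))
    return gpus


def query_gpus():
    """Return [(name, total_memory_mib), ...], or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True,
    )
    if result.returncode != 0:
        return []
    return parse_gpu_memory(result.stdout)


if __name__ == "__main__":
    gpus = query_gpus()
    if not gpus:
        print("No NVIDIA GPU detected (is the driver installed?)")
    for name, mem in gpus:
        status = "OK" if mem >= MIN_GPU_MEMORY_MIB else "below 20 GB"
        print(f"{name}: {mem} MiB ({status})")
```

This uses only the Python standard library, so it can be run before the virtual environment and PyTorch are set up.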

Step 1: Install Necessary Packages

Begin by updating your system’s package list and installing Python, FFmpeg, and Git.

apt update -y

apt install python3-full python3-virtualenv ffmpeg git -y

Step 2: Set Up Python Environment

Create and activate a virtual environment that isolates your Python setup and prevents conflicts between project dependencies.

Let’s create a virtual environment for your project.

python3 -m venv text2video-env

Next, activate the virtual environment.

source text2video-env/bin/activate

Step 3: Install Python Libraries

Install PyTorch, the deep learning framework the pipeline runs on, from the CUDA 11.8 wheel index.

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

Install additional libraries for AI-driven image generation and video processing.

pip install diffusers transformers accelerate scipy tqdm opencv-python einops moviepy

Step 4: Create the Python Script
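Before creating the script, one optional check is that the libraries installed in Step 3 can be found from inside the activated virtual environment. This is a minimal sketch, not part of the tutorial's script. The helper name missing_modules is hypothetical, and the module list assumes the usual import names (for example, the pip package opencv-python is imported as cv2).

```python
import importlib.util

# Import names for the packages installed in Step 3.
# Assumption: "opencv-python" is imported as "cv2"; the others share
# their pip package name.
REQUIRED_MODULES = [
    "torch", "torchvision", "torchaudio", "diffusers", "transformers",
    "accelerate", "scipy", "tqdm", "cv2", "einops", "moviepy",
]


def missing_modules(module_names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in module_names
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules are importable.")
```

If anything is reported missing, the usual cause is running pip outside the activated text2video-env environment.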

Write a Python script named text2video_stablediffusion.py. This script will use AI models to generate video frames from text. Start by importing necessary libraries and initializing key variables:

nano text2video_stablediffusion.py

Import the necessary modules.

import os
import subprocess
import torch
from diffusers import StableDiffusionPipeline

Step 5: Initialize the AI Pipeline

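Set up the StableDiffusionPipeline, which turns text prompts into images. The script loads the pre-trained CompVis/stable-diffusion-v1-4 checkpoint in half precision (float16) and moves it to the GPU. Note that this is a text-to-image model, so the video is built from individually generated frames: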

def main():
    # Initialize pipeline
    pipe = StableDiffusionPipeline.from_pretrained(
        "CompVis/stable-diffusion-v1-4",
        torch_dtype=torch.float16
    )
    pipe.to("cuda")

Step 6: Define the Video Prompt and Parameters

Configure your video by setting the prompt, the number of frames, and the frames per second (FPS):

# Define your prompt

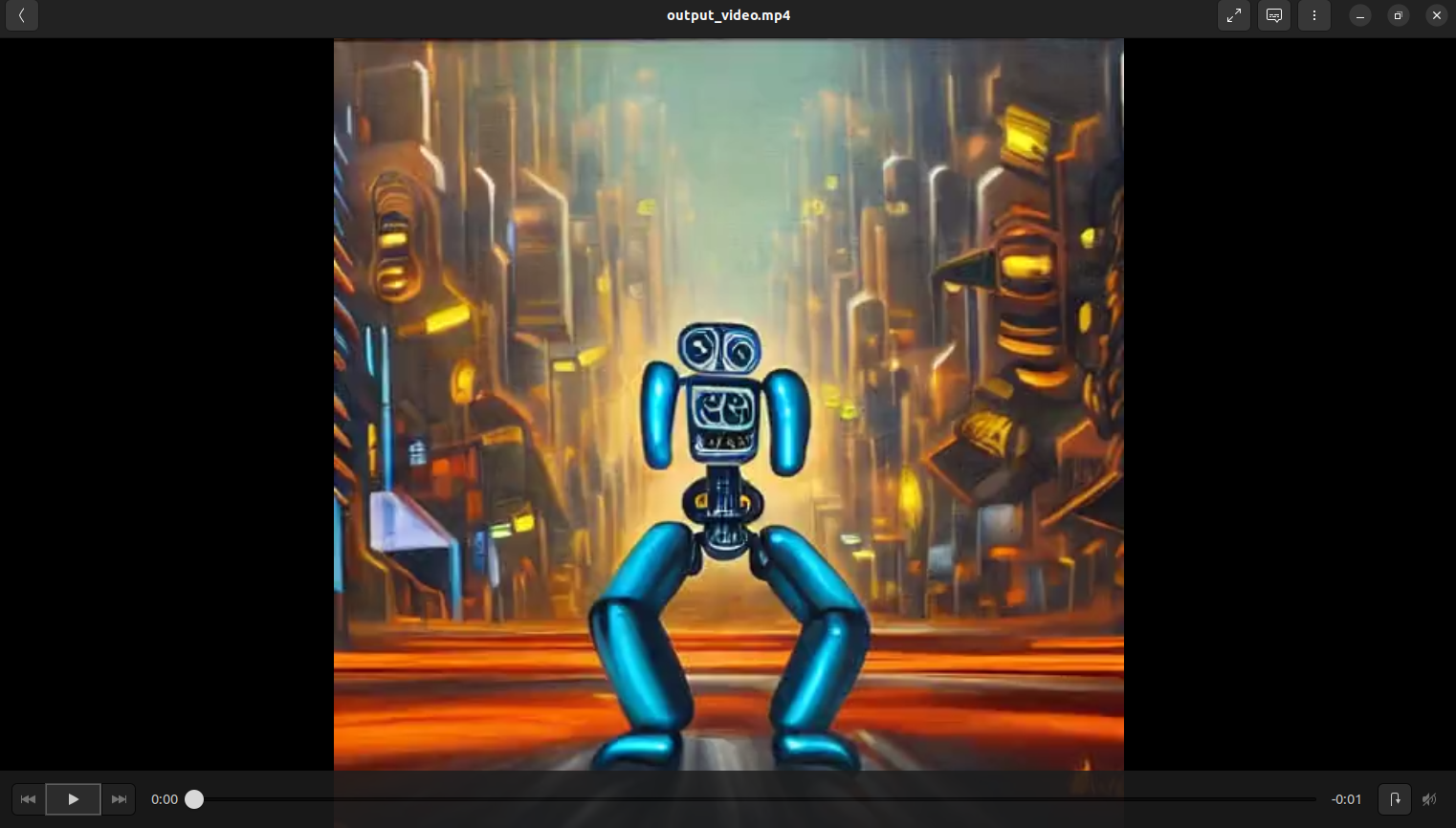

prompt = "A surreal painting of a dancing robot in a futuristic city."

print(f"Generating frames for prompt: {prompt}")

# Directory to store the generated frames

frames_folder = "generated_frames"

os.makedirs(frames_folder, exist_ok=True)

# Number of frames and fps

num_frames = 30 # e.g., for 30 frames at 1 second of video

fps = 30Step 7: Generate and Save Frames

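Generate each frame from the same text prompt, changing the random seed for every frame. The shared prompt keeps the theme consistent, while the different seeds make each frame a distinct image. Because every frame is sampled independently, expect visible variation between consecutive frames rather than smooth motion.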

    # Generate frames
    for i in range(num_frames):
        seed = 42 + i
        generator = torch.Generator("cuda").manual_seed(seed)

        # Generate one image
        image = pipe(prompt, guidance_scale=7.5, generator=generator).images[0]

        # Save frame
        frame_path = os.path.join(frames_folder, f"frame_{i:03d}.png")
        image.save(frame_path)

    # Stitch frames into a video using ffmpeg
    video_filename = "output_video.mp4"
    print(f"Combining frames into {video_filename} at {fps} FPS...")

Step 8: Compile the Video

After generating the frames, compile them into a single video file using FFmpeg. This step stitches the images into a flowing video sequence.

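    # check=True raises an error if ffmpeg exits with a non-zero status,
    # so the success message below is only printed when encoding worked.
    subprocess.run([
        "ffmpeg",
        "-framerate", str(fps),
        "-i", os.path.join(frames_folder, "frame_%03d.png"),
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",
        video_filename
    ], check=True)

    print("Video generation complete.")

if __name__ == "__main__":
    main()

Save and close the file.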
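For readers who want the FFmpeg call from Step 8 in a reusable form, here is a minimal sketch. The helper names expected_frame_paths, missing_frames, and build_ffmpeg_command are hypothetical and not part of the tutorial's script. It adds two things as assumptions about what is useful: the -y flag, so FFmpeg overwrites an existing output file instead of prompting, and a check that the expected frame files exist before encoding.

```python
import os


def expected_frame_paths(frames_folder, num_frames):
    """File names the generation loop writes: frame_000.png, frame_001.png, ..."""
    return [os.path.join(frames_folder, f"frame_{i:03d}.png")
            for i in range(num_frames)]


def missing_frames(frames_folder, num_frames):
    """Return the expected frame files that are not present on disk."""
    return [path for path in expected_frame_paths(frames_folder, num_frames)
            if not os.path.exists(path)]


def build_ffmpeg_command(frames_folder, fps, video_filename):
    """Build the same FFmpeg argument list as Step 8, plus -y to overwrite."""
    return [
        "ffmpeg",
        "-y",                         # overwrite the output file if it exists
        "-framerate", str(fps),       # input frame rate for the image sequence
        "-i", os.path.join(frames_folder, "frame_%03d.png"),
        "-c:v", "libx264",            # encode with H.264
        "-pix_fmt", "yuv420p",        # pixel format most players can decode
        video_filename,
    ]
```

The returned list can be passed to subprocess.run(cmd, check=True). The frame_%03d.png input pattern matches the zero-padded, three-digit names produced by the f"frame_{i:03d}.png" format string in Step 7, which is why the two must stay in sync.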

Step 9: Execute the Script

Run the script to generate the video.

python3 text2video_stablediffusion.py

This script will generate a video from the text prompt and save it to the file output_video.mp4.

[libx264 @ 0x55cf716f6a40] using cpu capabilities: MMX2 SSE2Fast SSSE3 SSE4.2 AVX FMA3 BMI2 AVX2

[libx264 @ 0x55cf716f6a40] profile High, level 3.0, 4:2:0, 8-bit

[libx264 @ 0x55cf716f6a40] 264 - core 164 r3108 31e19f9 - H.264/MPEG-4 AVC codec - Copyleft 2003-2023 - http://www.videolan.org/x264.html - options: cabac=1 ref=3 deblock=1:0:0 analyse=0x3:0x113 me=hex subme=7 psy=1 psy_rd=1.00:0.00 mixed_ref=1 me_range=16 chroma_me=1 trellis=1 8x8dct=1 cqm=0 deadzone=21,11 fast_pskip=1 chroma_qp_offset=-2 threads=4 lookahead_threads=1 sliced_threads=0 nr=0 decimate=1 interlaced=0 bluray_compat=0 constrained_intra=0 bframes=3 b_pyramid=2 b_adapt=1 b_bias=0 direct=1 weightb=1 open_gop=0 weightp=2 keyint=250 keyint_min=25 scenecut=40 intra_refresh=0 rc_lookahead=40 rc=crf mbtree=1 crf=23.0 qcomp=0.60 qpmin=0 qpmax=69 qpstep=4 ip_ratio=1.40 aq=1:1.00

Output #0, mp4, to 'output_video.mp4':

Metadata:

encoder : Lavf60.16.100

Stream #0:0: Video: h264 (avc1 / 0x31637661), yuv420p(tv, progressive), 512x512, q=2-31, 30 fps, 15360 tbn

Metadata:

encoder : Lavc60.31.102 libx264

Side data:

cpb: bitrate max/min/avg: 0/0/0 buffer size: 0 vbv_delay: N/A

[out#0/mp4 @ 0x55cf716f5cc0] video:607kB audio:0kB subtitle:0kB other streams:0kB global headers:0kB muxing overhead: 0.158672%

frame= 30 fps=0.0 q=-1.0 Lsize= 608kB time=00:00:00.90 bitrate=5538.0kbits/s speed=1.84x

[libx264 @ 0x55cf716f6a40] frame I:2 Avg QP:29.58 size: 20441

[libx264 @ 0x55cf716f6a40] frame P:28 Avg QP:30.96 size: 20731

[libx264 @ 0x55cf716f6a40] mb I I16..4: 6.6% 51.2% 42.2%

[libx264 @ 0x55cf716f6a40] mb P I16..4: 7.1% 51.5% 41.2% P16..4: 0.1% 0.1% 0.0% 0.0% 0.0% skip: 0.0%

[libx264 @ 0x55cf716f6a40] 8x8 transform intra:51.6% inter:79.0%

[libx264 @ 0x55cf716f6a40] coded y,uvDC,uvAC intra: 78.5% 95.0% 77.2% inter: 81.0% 100.0% 59.7%

[libx264 @ 0x55cf716f6a40] i16 v,h,dc,p: 32% 28% 3% 37%

[libx264 @ 0x55cf716f6a40] i8 v,h,dc,ddl,ddr,vr,hd,vl,hu: 28% 22% 10% 6% 5% 6% 7% 7% 9%

[libx264 @ 0x55cf716f6a40] i4 v,h,dc,ddl,ddr,vr,hd,vl,hu: 39% 21% 8% 5% 6% 6% 6% 5% 4%

[libx264 @ 0x55cf716f6a40] i8c dc,h,v,p: 33% 23% 32% 12%

[libx264 @ 0x55cf716f6a40] Weighted P-Frames: Y:3.6% UV:3.6%

[libx264 @ 0x55cf716f6a40] ref P L0: 26.6% 19.4% 22.6% 29.8% 1.6%

[libx264 @ 0x55cf716f6a40] kb/s:4970.80

Video generation complete.

Download the output_video.mp4 file to your local desktop machine and double-click on it to play the video.
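Clip length follows directly from the two parameters set in Step 6: duration in seconds equals num_frames divided by fps, so 30 frames at 30 FPS yields about one second of video. The sketch below shows that arithmetic for planning a longer clip. The function names are illustrative and not part of the script.

```python
import math


def clip_duration_seconds(num_frames, fps):
    """Length of the compiled clip in seconds."""
    return num_frames / fps


def frames_for_duration(seconds, fps):
    """Number of frames needed to cover a target duration at a given FPS."""
    # round() guards against floating-point noise before rounding up
    return math.ceil(round(seconds * fps, 6))


if __name__ == "__main__":
    print(clip_duration_seconds(30, 30))
    print(frames_for_duration(5, 30))
```

Since every frame is a separate Stable Diffusion run, generation time grows roughly in proportion to the frame count, so a longer clip at the same FPS takes correspondingly longer to produce.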

Conclusion

Using Atlantic.Net’s GPU servers for text-to-video generation harnesses cutting-edge AI technology to transform simple text descriptions into dynamic visual stories. This process enhances the creative possibilities and streamlines content creation across various digital media platforms. Whether for business or personal projects, integrating text-to-video technology offers a powerful tool for innovative and engaging digital content creation.