This guide outlines the process of setting up and connecting to a local Weaviate instance from an Atlantic.Net Cloud server using Docker for containerization and Ollama for the embedding and generative models.

Weaviate is an open-source vector database that stores data objects alongside their vector embeddings and lets you search them by similarity. It is a popular choice for Retrieval-Augmented Generation (RAG) applications.
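To make "search by similarity" concrete, here is a minimal pure-Python sketch that ranks a few hypothetical three-dimensional vectors by cosine similarity to a query vector. Real embeddings, such as those produced by nomic-embed-text later in this guide, have hundreds of dimensions. Weaviate also avoids comparing every vector by using an approximate nearest-neighbor index (HNSW by default), but the underlying idea is the same. The vectors and function names are illustrative only.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between vectors a and b (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query: list[float], docs: dict[str, list[float]]) -> list[str]:
    """Return document names ordered from most to least similar to the query."""
    return sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)


# Hypothetical toy "embeddings"; real models produce much longer vectors.
docs = {
    "space opera": [0.9, 0.1, 0.0],
    "romantic comedy": [0.0, 0.2, 0.9],
    "sci-fi thriller": [0.8, 0.3, 0.1],
}
query = [1.0, 0.2, 0.0]  # hypothetical embedding of a query such as "movies set in space"

print(rank_by_similarity(query, docs))
# → ['space opera', 'sci-fi thriller', 'romantic comedy']
```

Weaviate's default distance metric is cosine distance, which is 1 minus this similarity, so smaller distances indicate closer matches.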

Prerequisites

- An active Atlantic.Net account with a deployed Ubuntu cloud instance

- We recommend selecting a cloud instance with ample resources (at least 8GB RAM, preferably 16GB or more) to accommodate Weaviate and the language models.

- SSH access to your cloud server.

Part 1 – Prepare the Cloud Platform Environment

Step 1 – Update Ubuntu

- Update System Packages:

sudo apt update && sudo apt upgrade -y

Step 2 – Install Docker

- Install Docker:

Install the following packages to allow apt to use a repository over HTTPS:

sudo apt install -y ca-certificates curl gnupg lsb-release

- Add Docker’s Official GPG Key

Download and add Docker’s official GPG key:

sudo mkdir -p /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

- Set Up the Docker Repository

Add the Docker repository to APT sources:

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- Update the Package List Again

Update the package list to include Docker packages from the new repository:

sudo apt update -y

- Install Docker Engine

Install the latest version of Docker Engine, containerd, and Docker Compose:

sudo apt install -y docker-ce docker-ce-cli containerd.io docker-compose-plugin

- Verify the Installation

Check that Docker is installed correctly by running the hello-world image:

sudo docker run hello-world

You should see output like this:

root@Weaviate:~# sudo docker run hello-world
Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
c1ec31eb5944: Pull complete
Digest: sha256:d211f485f2dd1dee407a80973c8f129f00d54604d2c90732e8e320e5038a0348
Status: Downloaded newer image for hello-world:latest
Hello from Docker!
This message shows that your installation appears to be working correctly.
To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub. (amd64)
 3. The Docker daemon created a new container from that image which runs the executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it to your terminal.
To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash
Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/
For more examples and ideas, visit:
 https://docs.docker.com/get-started/
root@Weaviate:~#

- Add Your User to the docker Group (Optional)

To run Docker commands without sudo, add your user to the docker group:

sudo usermod -aG docker $USER

Log out and back in for the new group membership to take effect.

Step 3 – Set Up Weaviate in Docker

Weaviate publishes official Docker images, so Docker Compose is a convenient way to run it. We will use Docker Compose to create the Weaviate database.

- Create docker-compose.yml

nano docker-compose.yml

- Use the Provided docker-compose.yml Content (Source: Weaviate Website)

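---
services:
  weaviate:
    command:
    - --host
    - 0.0.0.0
    - --port
    - '8080'
    - --scheme
    - http
    image: cr.weaviate.io/semitechnologies/weaviate:1.27.2
    # Bound to localhost here because anonymous access is enabled below;
    # the Python client in this guide connects from the same server.
    ports:
    - 127.0.0.1:8080:8080
    - 127.0.0.1:50051:50051
    volumes:
    - weaviate_data:/var/lib/weaviate
    restart: on-failure:0
    environment:
      QUERY_DEFAULTS_LIMIT: 25
      AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED: 'true'
      PERSISTENCE_DATA_PATH: '/var/lib/weaviate'
      DEFAULT_VECTORIZER_MODULE: 'none'
      ENABLE_API_BASED_MODULES: 'true'
      CLUSTER_HOSTNAME: 'node1'
    # Added for this guide (not in the Weaviate sample): on Linux, lets the
    # container reach Ollama on the Docker host as host.docker.internal
    extra_hosts:
    - "host.docker.internal:host-gateway"
volumes:
  weaviate_data:
...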

- Start Weaviate

Now start Weaviate in detached mode (in the background) with Docker Compose. Run this command from the directory that contains docker-compose.yml:

docker compose up -d

You will see output like this:

root@Weaviate:~# docker compose up -d
[+] Running 8/8
 ✔ weaviate Pulled 7.0s
 ✔ 43c4264eed91 Pull complete 0.8s
 ✔ 45d7c130d6ea Pull complete 1.0s
 ✔ db001b655dd0 Pull complete 3.3s
 ✔ 1eb7452f9739 Pull complete 3.3s
 ✔ 2777e559ecf5 Pull complete 4.0s
 ✔ add3a9abe015 Pull complete 4.3s
 ✔ 088f4f300a62 Pull complete 4.3s
[+] Running 3/3
 ✔ Network root_default Created 0.1s
 ✔ Volume "root_weaviate_data" C... 0.0s
 ✔ Container root-weaviate-1 Sta... 0.7s
root@Weaviate:~#
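docker compose up -d returns once the container has started, which can be a few seconds before Weaviate is ready to accept requests. The minimal sketch below uses only the Python standard library to poll Weaviate's readiness endpoint (/v1/.well-known/ready, which answers HTTP 200 once the node is ready). It assumes the HTTP port is mapped to localhost:8080 as in docker-compose.yml. The function names are illustrative, and the check parameter exists only so the polling loop can be tested without a running server.

```python
import time
import urllib.request
from typing import Callable

# Assumption: Weaviate's HTTP port is published on localhost:8080 (see docker-compose.yml)
READY_URL = "http://localhost:8080/v1/.well-known/ready"


def is_ready(url: str = READY_URL, timeout: float = 2.0) -> bool:
    """Return True if the readiness endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError and HTTPError (e.g. 503 while starting), refused connections,
        # and timeouts are all subclasses of OSError
        return False


def wait_until_ready(url: str = READY_URL, attempts: int = 30, delay: float = 2.0,
                     check: Callable[[str], bool] = is_ready) -> bool:
    """Call check(url) until it succeeds or the attempts run out."""
    for attempt in range(attempts):
        if check(url):
            return True
        if attempt < attempts - 1:
            time.sleep(delay)  # wait before the next attempt
    return False
```

For example, from a Python 3 session on the server, print(wait_until_ready()) prints True once Weaviate reports ready, or False after all attempts have failed.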

Step 4 – Install Ollama

Next, install Ollama, an open-source tool for running large language models locally. Weaviate does not require Ollama, but this guide uses it to serve the embedding and generative models. Ollama provides an installation script:

curl -fsSL https://ollama.ai/install.sh | bash

Ollama is a large application that will take a few minutes to download and install.

You should see output like this:

root@Weaviate:~# curl -fsSL https://ollama.ai/install.sh | bash
>>> Installing ollama to /usr/local
>>> Downloading Linux amd64 bundle
########################################################## 100.0%
>>> Creating ollama user...
>>> Adding ollama user to render group...
>>> Adding ollama user to video group...
>>> Adding current user to ollama group...
>>> Creating ollama systemd service...
>>> Enabling and starting ollama service...
Created symlink /etc/systemd/system/default.target.wants/ollama.service → /etc/systemd/system/ollama.service.
>>> The Ollama API is now available at 127.0.0.1:11434.
>>> Install complete. Run "ollama" from the command line.
WARNING: No NVIDIA/AMD GPU detected. Ollama will run in CPU-only mode.

- Download Ollama Models

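Next, download the two pre-trained models this guide uses: nomic-embed-text, an embedding model that converts text into vectors, and llama3.2, a large language model that generates text.

First, pull nomic-embed-text: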

ollama pull nomic-embed-text

You should see output like this:

root@Weaviate:~# ollama pull nomic-embed-text
pulling manifest
pulling 970aa74c0a90... 100% ▕████████▏ 274 MB
pulling c71d239df917... 100% ▕████████▏ 11 KB
pulling ce4a164fc046... 100% ▕████████▏ 17 B
pulling 31df23ea7daa... 100% ▕████████▏ 420 B
verifying sha256 digest
writing manifest
success
root@Weaviate:~#
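To confirm the embedding model works before Weaviate depends on it, you can call Ollama's REST API directly. This sketch uses only the standard library to POST to the /api/embed endpoint at the default address reported by the installer (127.0.0.1:11434). It assumes an Ollama release recent enough to provide /api/embed (older releases only offer /api/embeddings). The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama still listens on the default address shown by the installer
OLLAMA_URL = "http://127.0.0.1:11434"


def build_embed_request(text: str, model: str = "nomic-embed-text",
                        base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/embed endpoint."""
    payload = json.dumps({"model": model, "input": text}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/embed",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(text: str, model: str = "nomic-embed-text") -> list[float]:
    """Send the request and return the embedding vector for one piece of text."""
    with urllib.request.urlopen(build_embed_request(text, model), timeout=120) as resp:
        body = json.load(resp)
    # /api/embed returns {"embeddings": [[...]]}: one vector per input string
    return body["embeddings"][0]
```

On the server, vector = embed("A crew travels through a wormhole") followed by print(len(vector)) prints the number of dimensions the model produces.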

Next, pull llama3.2. The download is about 2 GB, so it may take a while.

ollama pull llama3.2

You should see output like this:

root@Weaviate:~# ollama pull llama3.2
pulling manifest
pulling dde5aa3fc5ff... 100% ▕████████▏ 2.0 GB
pulling 966de95ca8a6... 100% ▕████████▏ 1.4 KB
pulling fcc5a6bec9da... 100% ▕████████▏ 7.7 KB
pulling a70ff7e570d9... 100% ▕████████▏ 6.0 KB
pulling 56bb8bd477a5... 100% ▕████████▏ 96 B
pulling 34bb5ab01051... 100% ▕████████▏ 561 B
verifying sha256 digest
writing manifest
success
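A similar standard-library sketch can check that llama3.2 generates text. It POSTs to Ollama's /api/generate endpoint with "stream": false, so Ollama returns a single JSON object whose "response" field holds the complete answer. The default address is assumed, and the helper names are hypothetical. The timeout is generous because CPU-only generation can be slow.

```python
import json
import urllib.request

# Assumption: Ollama still listens on the default address shown by the installer
OLLAMA_URL = "http://127.0.0.1:11434"


def build_generate_request(prompt: str, model: str = "llama3.2",
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3.2") -> str:
    """Send the prompt and return the generated text."""
    # Generous timeout: generation on a CPU-only server can take a while
    with urllib.request.urlopen(build_generate_request(prompt, model), timeout=600) as resp:
        body = json.load(resp)
    return body["response"]
```

For example, print(generate("In one sentence, what is a vector database?")).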

Part 2 – Install Python & Jupyter Notebooks

You can interact with Weaviate through its REST and GraphQL APIs or through a client library (SDK). This procedure uses the Python client, but Weaviate also provides clients for JavaScript/TypeScript, Go, and Java if you prefer one of those languages.

Step 1 – Install Python

Jupyter Lab requires Python. You can install it using the following commands:

apt install python3 python3-pip -y

Note: If you are prompted to restart services after installation, select <Ok> to continue.

Next, install the Python virtual environment package.

pip install -U virtualenv

Step 2 – Install Jupyter Lab

Now, install Jupyter Lab using the pip command.

pip3 install jupyterlab

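This command installs Jupyter Lab and its dependencies. On Ubuntu 23.04 and later, pip may refuse to install packages system-wide and report an externally-managed-environment error. In that case, install Jupyter Lab into a virtual environment instead and adjust the paths used later in this guide to match.

Next, edit the .bashrc file.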

nano ~/.bashrc

Add the following line to the bottom of the file so that scripts installed by pip under ~/.local/bin are on your PATH:

export PATH=$PATH:~/.local/bin/

Reload the changes using the following command.

source ~/.bashrc

Next, run Jupyter Lab once in the foreground to make sure it starts correctly:

jupyter lab --allow-root --ip=0.0.0.0 --no-browser

Check the output to make sure there are no errors. If it starts successfully, you will see output similar to the following:

[C 2023-12-05 15:09:31.378 ServerApp]
To access the server, open this file in a browser:
http://ubuntu:8888/lab?token=aa67d76764b56c5558d876e56709be27446
http://127.0.0.1:8888/lab?token=aa67d76764b56c5558d876e56709be27446

Press CTRL+C to stop the server.

Step 3 – Configure Jupyter Lab

By default, Jupyter Lab authenticates browser access with a randomly generated token (shown in the URLs above) rather than a password. To protect Jupyter Lab with a password instead, first generate the Jupyter Lab configuration file using the following command.

jupyter-lab --generate-config

Output.

Writing default config to: /root/.jupyter/jupyter_lab_config.py

Next, set the Jupyter Lab password.

jupyter-lab password

Set your password as shown below:

Enter password:
Verify password:
[JupyterPasswordApp] Wrote hashed password to /root/.jupyter/jupyter_server_config.json

You can verify your hashed password using the following command.

cat /root/.jupyter/jupyter_server_config.json

Output.

{
  "IdentityProvider": {
    "hashed_password": "argon2:$argon2id$v=19$m=10240,t=10,p=8$zf0ZE2UkNLJK39l8dfdgHA$0qIAAnKiX1EgzFBbo4yp8TgX/G5GrEsV29yjHVUDHiQ"
  }
}

Note this information, as you will need to add it to your config.

Next, edit the Jupyter Lab configuration file.

nano /root/.jupyter/jupyter_lab_config.py

Define your server IP, hashed password, and other settings as shown below:

c.ServerApp.ip = 'your-server-ip'
c.ServerApp.open_browser = False
c.ServerApp.password = 'argon2:$argon2id$v=19$m=10240,t=10,p=8$zf0ZE2UkNLJK39l8dfdgHA$0qIAAnKiX1EgzFBbo4yp8TgX/G5GrEsV29yjHVUDHiQ'
c.ServerApp.port = 8888

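Make sure you format the file exactly as above. For example, the IP address and password are enclosed in straight single quotes (curly quotes are a Python syntax error), the port number is not in quotes, and the False boolean must have a capital F.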

Save and close the file when you are done.
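Copying the long hash by hand is error-prone. As an optional alternative, the following sketch reads the hash from jupyter_server_config.json and prints the four settings above. It uses repr() so that the strings always get straight quotes. It assumes the default file location shown earlier for the root user, and the function names are hypothetical.

```python
import json
from pathlib import Path

# Assumption: default location written by "jupyter-lab password" for the root user
SERVER_CONFIG = Path("/root/.jupyter/jupyter_server_config.json")


def read_hashed_password(path: Path = SERVER_CONFIG) -> str:
    """Read the hashed password stored under IdentityProvider.hashed_password."""
    data = json.loads(Path(path).read_text())
    return data["IdentityProvider"]["hashed_password"]


def render_lab_config(server_ip: str, hashed_password: str, port: int = 8888) -> str:
    """Return the four settings used in this guide; repr() emits straight quotes."""
    return "\n".join([
        f"c.ServerApp.ip = {server_ip!r}",
        "c.ServerApp.open_browser = False",
        f"c.ServerApp.password = {hashed_password!r}",
        f"c.ServerApp.port = {port}",
    ])
```

Run as root, print(render_lab_config("your-server-ip", read_hashed_password())) prints lines you can paste into jupyter_lab_config.py. Replace your-server-ip with your server's IP address first.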

Step 4 – Create a systemd Service File

Next, create a systemd service file to manage Jupyter Lab.

nano /etc/systemd/system/jupyter-lab.service

Add the following configuration:

[Unit]
Description=Jupyter Lab

[Service]
Type=simple
PIDFile=/run/jupyter.pid
WorkingDirectory=/root/
ExecStart=/usr/local/bin/jupyter lab --config=/root/.jupyter/jupyter_lab_config.py --allow-root
User=root
Group=root
Restart=always
RestartSec=10

[Install]
WantedBy=multi-user.target

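Save and close the file. The ExecStart line assumes that jupyter is installed in /usr/local/bin; if which jupyter reports a different path, use that path instead. Then reload the systemd daemon.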

systemctl daemon-reload

Next, start the Jupyter Lab service using the following command. To also start it automatically at boot, run systemctl enable jupyter-lab.

systemctl start jupyter-lab

You can now check the status of the Jupyter Lab service using the following command.

systemctl status jupyter-lab

Jupyter Lab should now be running and listening on port 8888. You can verify it with the following command.

ss -antpl | grep jupyter

Output.

LISTEN 0 128 104.219.55.40:8888 0.0.0.0:* users:(("jupyter-lab",pid=156299,fd=6))

Step 5 – Access Jupyter Lab

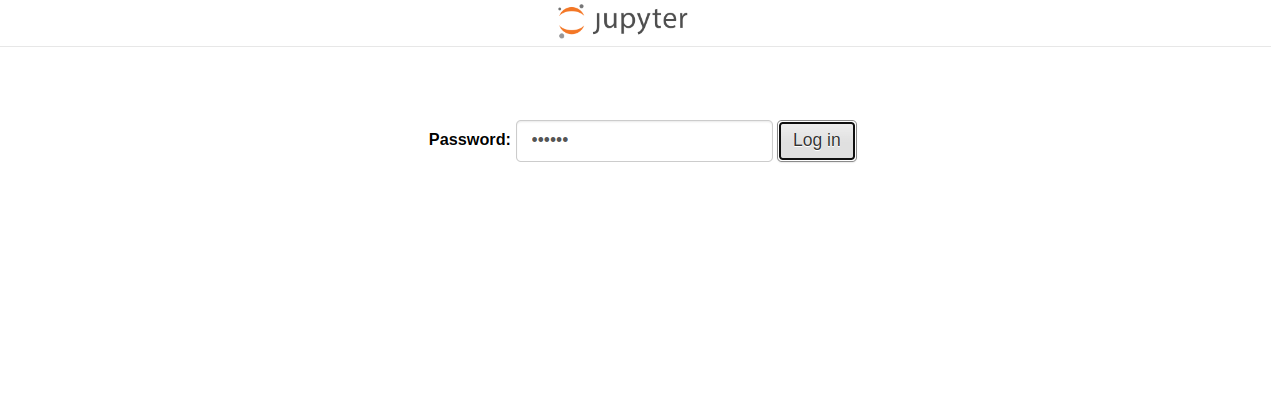

Now, open your web browser and access the Jupyter Lab web interface using the URL http://your-server-ip:8888. You will see Jupyter Lab on the following screen:

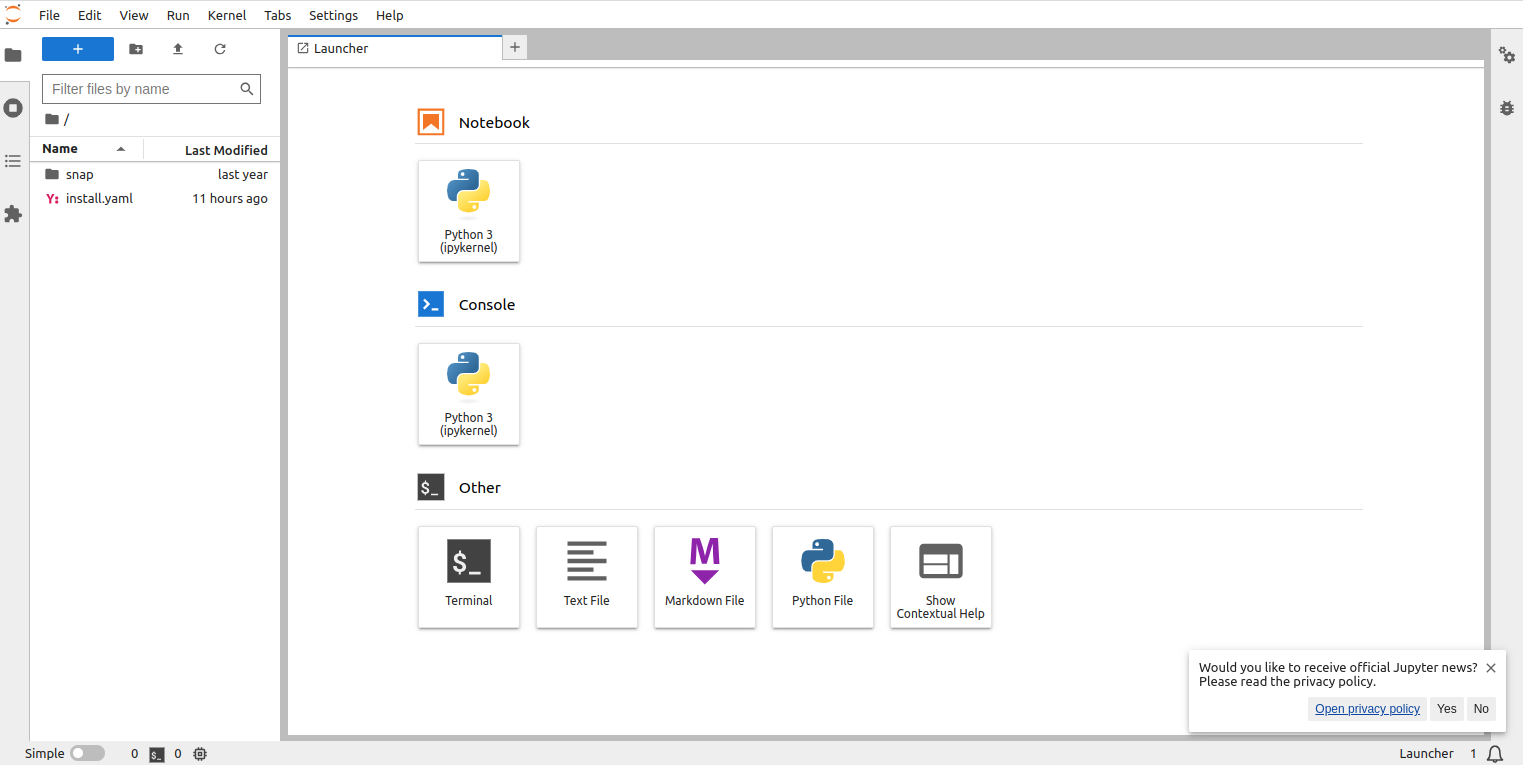

Provide the password you set during the installation and click on Log in. You will see the Jupyter Lab dashboard on the following screen:

Step 6 – Start the Python3 Notebook

From the Launcher, open a new Python 3 notebook, then move on to Part 3.

Part 3 – Configure Weaviate

Step 1 – Install Weaviate Client Library

- Choose Your Preferred Language

Weaviate offers client libraries in Python, JavaScript/TypeScript, Go, and Java.

- Install the Weaviate Client Library

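You can install it from either the command line or directly from a Jupyter notebook; for ease, I recommend the notebook. In a notebook cell, prefix the command with % (for example, %pip install -U weaviate-client) so that the package is installed into the notebook's kernel environment.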

pip install -U weaviate-client

- Install Required Modules

pip install pandas

pip install tqdm

Now test whether your Python client can connect to Weaviate:

import weaviate
from weaviate.classes.init import AdditionalConfig, Timeout

# Connect to the local Weaviate instance started with Docker Compose
client = weaviate.connect_to_local(
    port=8080,
    grpc_port=50051,
    additional_config=AdditionalConfig(
        timeout=Timeout(init=30, query=60, insert=120)  # Values in seconds
    ),
)

print(client.is_ready())

You should get this output:

True

You can pull the server’s metadata with this command:

import json

metainfo = client.get_meta()
print(json.dumps(metainfo, indent=2))

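You should see output similar to the following. This sample was captured from an older Weaviate release (version 1.23.13), so on your server the version field will report the version you are running, and the module list may differ: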

{
  "hostname": "http://[::]:8080",
  "modules": {
    "generative-openai": {
      "documentationHref": "https://platform.openai.com/docs/api-reference/completions",
      "name": "Generative Search - OpenAI"
    },
    "qna-openai": {
      "documentationHref": "https://platform.openai.com/docs/api-reference/completions",
      "name": "OpenAI Question & Answering Module"
    },
    "ref2vec-centroid": {},
    "reranker-cohere": {
      "documentationHref": "https://txt.cohere.com/rerank/",
      "name": "Reranker - Cohere"
    },
    "text2vec-cohere": {
      "documentationHref": "https://docs.cohere.ai/embedding-wiki/",
      "name": "Cohere Module"
    },
    "text2vec-huggingface": {
      "documentationHref": "https://huggingface.co/docs/api-inference/detailed_parameters#feature-extraction-task",
      "name": "Hugging Face Module"
    },
    "text2vec-openai": {
      "documentationHref": "https://platform.openai.com/docs/guides/embeddings/what-are-embeddings",
      "name": "OpenAI Module"
    }
  },
  "version": "1.23.13"
}
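Because this guide serves its models with Ollama, it is worth confirming that your server exposes Weaviate's Ollama integrations (named text2vec-ollama and generative-ollama) before creating a collection. The sample output above lists no Ollama modules. This minimal sketch takes the dictionary returned by client.get_meta() and reports any required module that is missing. The function name and the default module list are assumptions for illustration.

```python
from typing import Iterable

# Assumption: Weaviate's module names for its Ollama integrations
REQUIRED_MODULES = ("text2vec-ollama", "generative-ollama")


def missing_modules(meta: dict, required: Iterable[str] = REQUIRED_MODULES) -> list[str]:
    """Return the required module names that do not appear in the meta output."""
    available = meta.get("modules", {})
    return [name for name in required if name not in available]
```

For example, print(missing_modules(client.get_meta())) prints an empty list when both modules are available.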

Step 2 – Create some data in Weaviate

- Create a Collection

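This collection uses Ollama for both modules: nomic-embed-text to vectorize objects and llama3.2 for generative (RAG) queries. Weaviate calls Ollama from inside its container, so api_endpoint must be an address the container can reach. The value below assumes two things. First, host.docker.internal resolves to the Docker host; on Linux, this requires an extra_hosts entry such as "host.docker.internal:host-gateway" on the Weaviate service. Second, Ollama listens on an interface the container can reach. By default Ollama listens only on 127.0.0.1, which you can change with the OLLAMA_HOST environment variable of the ollama service.

import weaviate.classes.config as wc

# Assumption: Weaviate reaches Ollama on the Docker host through host.docker.internal.
# Adjust this endpoint to match your network setup.
OLLAMA_ENDPOINT = "http://host.docker.internal:11434"

client.collections.create(
    name="Movie",
    properties=[
        wc.Property(name="title", data_type=wc.DataType.TEXT),
        wc.Property(name="overview", data_type=wc.DataType.TEXT),
        wc.Property(name="vote_average", data_type=wc.DataType.NUMBER),
        wc.Property(name="genre_ids", data_type=wc.DataType.INT_ARRAY),
        wc.Property(name="release_date", data_type=wc.DataType.DATE),
        wc.Property(name="tmdb_id", data_type=wc.DataType.INT),
    ],
    # Vectorize objects with the nomic-embed-text model served by Ollama
    vectorizer_config=wc.Configure.Vectorizer.text2vec_ollama(
        api_endpoint=OLLAMA_ENDPOINT,
        model="nomic-embed-text",
    ),
    # Use llama3.2 (served by Ollama) as the generative module
    generative_config=wc.Configure.Generative.ollama(
        api_endpoint=OLLAMA_ENDPOINT,
        model="llama3.2",
    ),
)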

If successful, you will see output like this:

<weaviate.collections.collection.sync.Collection at 0x7f6a0bc15e70>

- Import Data

Next, import a sample movie dataset derived from TMDB (The Movie Database), published in the weaviate-tutorials edu-datasets repository on GitHub.

import json
from datetime import datetime, timezone

import pandas as pd
import requests
from tqdm import tqdm
from weaviate.util import generate_uuid5

data_url = "https://raw.githubusercontent.com/weaviate-tutorials/edu-datasets/main/movies_data_1990_2024.json"
resp = requests.get(data_url)
resp.raise_for_status()  # Stop early if the download failed
df = pd.DataFrame(resp.json())

# Get the collection created in the previous step (the name must match exactly)
movies = client.collections.get("Movie")

# Enter context manager; the batcher sends objects in dynamically sized batches
with movies.batch.dynamic() as batch:
    # Loop through the data
    for _, movie in tqdm(df.iterrows()):
        # Convert a JSON date to `datetime` and add time zone information
        release_date = datetime.strptime(movie["release_date"], "%Y-%m-%d").replace(
            tzinfo=timezone.utc
        )

        # Convert a JSON array (stored as a string) to a list of integers
        genre_ids = json.loads(movie["genre_ids"])

        # Build the object payload
        movie_obj = {
            "title": movie["title"],
            "overview": movie["overview"],
            "vote_average": movie["vote_average"],
            "genre_ids": genre_ids,
            "release_date": release_date,
            "tmdb_id": movie["id"],
        }

        # Add object to batch queue, with a deterministic UUID derived from the TMDB id
        batch.add_object(
            properties=movie_obj,
            uuid=generate_uuid5(movie["id"]),
            # references=reference_obj  # You can add references here
        )

# The batcher sends any remaining objects on exit; check for failed objects
if len(movies.batch.failed_objects) > 0:
    print(f"Failed to import {len(movies.batch.failed_objects)} objects")

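Upon completion, you will see a progress line similar to this. Timing depends on your hardware, and vectorizing with Ollama on a CPU-only server can take considerably longer: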

680it [00:05, 135.25it/s]
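With the data imported, a first semantic search is a useful end-to-end check. This sketch assumes the v4 Python client API (collection.query.near_text, collection.generate.near_text with grouped_task, and collection.aggregate.over_all) and a Movie collection configured with Ollama vectorizer and generative modules. The helper function names are hypothetical, and attribute names such as response.generated may vary between client versions, so check the client documentation for the version you installed. near_text requires a vectorizer on the collection, and the generate call requires a generative module.

```python
from typing import Any, Optional


def count_movies(movies: Any) -> int:
    """Return the number of objects stored in the collection."""
    return movies.aggregate.over_all(total_count=True).total_count


def search_movies(movies: Any, text: str, limit: int = 3) -> list[tuple[str, Optional[float]]]:
    """Semantic search: return (title, distance) pairs for the closest movies."""
    response = movies.query.near_text(
        query=text,
        limit=limit,
        return_metadata=["distance"],  # ask Weaviate to include the vector distance
    )
    return [(obj.properties["title"], obj.metadata.distance) for obj in response.objects]


def summarize_movies(movies: Any, text: str, task: str, limit: int = 3) -> Optional[str]:
    """RAG: retrieve the closest movies, then run one generative task over all of them."""
    response = movies.generate.near_text(
        query=text,
        limit=limit,
        grouped_task=task,  # a single prompt applied to the retrieved objects together
    )
    return response.generated
```

For example, after movies = client.collections.get("Movie"), try print(search_movies(movies, "a journey through space")) or print(summarize_movies(movies, "a journey through space", "What do these movies have in common?")). Call client.close() when you are finished.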

Conclusion

Now that the data is imported into the Weaviate vector database, you can search it and use it in your applications. Weaviate’s documentation describes the different ways you can query the data, such as vector, keyword, and hybrid search.

By following this guide, you’ve successfully set up Weaviate on your Atlantic.Net Cloud Platform. You can now explore the powerful features of Weaviate and build your own RAG applications. Remember to refer to the official Weaviate documentation for more advanced use cases and configuration options.